Whatever’s Next - 360° Ambisonic Video Project

Whatever’s Next is an audio/visual experience that uses ambisonic sound and 360° video to document the uniquely challenging and unprecedented time in which it was created, in the midst of the worldwide COVID-19 pandemic. The project features an entirely virtual environment rendered in the Unity game engine. It includes 3D models created in Maya, 3D map data built in Blender, 3D room scans taken with Display.land, 360° ambisonic recordings, synthesized audio made in Logic Pro, and a collection of voice recordings gathered through social media.

There are two videos below. The first is the project’s documentation video that shares some of the process of creating the project and gives some context to its themes. The second is the final 360° video deliverable. We recommend experiencing the second video using a mobile device or VR headset and a set of headphones.

Documentation Video

Project Video - 360° Video with Ambisonic Audio

Whatever’s Next was created by Alec Lloyd, Sam Proctor, Hector Solis, & Andrew Johns at Arizona State University's Herberger Institute for Design and the Arts as part of the School of Arts, Media and Engineering's Spring 2020 Digital Culture Showcase.

The following was taken from the Whatever’s Next Project Documentation created by Alec Lloyd, Sam Proctor, Hector Solis, & Andrew Johns.

The Team

Sam - Worked to place the various 3D assets into a Unity scene, eventually animating a virtual camera to pass through the environment and record the frame-by-frame animation used for the final video. He coupled this animation with the ambisonic soundtrack and exported the finished piece.

Hector - Created and textured the 3D models in the scene, working in Maya and Blender. He then exported them as FBX files to a shared Google Drive, where they could be downloaded and imported into Unity.

Andrew - Edited and processed the interview audio we collected. He also worked in Logic Pro X to design synths for the ambient soundtrack and to arrange that soundtrack and the voice clips that accompanied the visuals.

Alec - Contributed to the initial design and ideation phase. He documented the different stages of our process in writing, photos, and video, and collected video interview footage from willing participants through social media. He also produced and edited each of the video submissions for the project, including the project update and the final documentation video.

Inspirations, motivations and/or goals of your work.

The nature of our project’s themes has changed a good deal since we first set out to create something at the beginning of the semester. At first, we were interested in creating something that spoke to the increasingly complicated issues of data security, private versus public data, and online presence. At the time, we looked for inspiration in pieces that brought awareness to privacy issues while letting viewers make their own judgments, like the artwork of Kyriaki Goni. Pieces like “Privacy Sessions” (Goni) and “Deletion Process_Only You Can See My History” (The Glass Room) embodied this idea. In both, the artist put their personal data out there in a way that provoked thought: location data in the first piece, search history in the second. As circumstances evolved midway through the semester, we decided to shift our focus to the coronavirus pandemic and its effects on each of us as we experience social distancing in this digital age.

Our inspiration for that theme came from the reality that we were all being forced to live as distant, only virtually connected members of society, so we looked for other pieces that tackled a similar theme. What we found was Blast Theory, an artist group in the UK with a couple of pieces that speak to experiences of global pandemics. The first, “Spit Spreads Death,” was a parade held in remembrance of an ill-advised parade in Philadelphia during the 1918 influenza pandemic, which caused a major outbreak in the city. The second, “A Cluster of 17 Cases,” is a scale model of a hotel in Hong Kong thought to be the epicenter of the 2003 SARS outbreak (Blast Theory). Ultimately, we wanted to create something like these pieces: a lens through which to look back on the outbreak once it was all over.

For the technical aspects of the project, such as pursuing a virtual environment (originally intended for VR) and integrating ambisonic audio, we drew inspiration from several artists. These included Björk (Vulnicura VR), Muse (Microsoft), and our own mentor Garth Paine. All of them have created visual and audio experiences that add new dimensions to the way music and art can be experienced.

Our goal was to create a fully virtual environment that took elements of the world that we were living in to encapsulate our experiences. We wanted to create a piece of content that would somehow capture this unique time and the different feelings that were being experienced during the COVID-19 pandemic.

Describing the process of making and the final outcome of your project.

The 3D models were created using Maya and Blender. Assets such as the warehouse and the fence were modeled in Maya. The captures of Google Maps 3D data, such as the main ASU Tempe campus, were made using Blender, a Blender add-on named MapsModelsImporter, and the graphics debugger RenderDoc. The method for taking these captures and bringing them into Blender was created by Élie Michel.

After acquiring a number of 3D models, we worked to piece these elements together inside of the Unity engine. The scene’s main camera position was automated over a period of about two and a half minutes so as to move through the level, capturing 360 degree stereoscopic frames.

To create an animation with these VR capabilities, we added a script to the main camera. When the scene ran, the script rendered two cubemaps, one for each eye, and placed them in an over-under format. This captured the scene as a 4K image sequence that Adobe Premiere could recognize as VR footage.
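Turning cubemaps into frames that Premiere and YouTube treat as VR footage typically involves resampling each eye into an equirectangular projection, then stacking the two eyes vertically. The documentation does not say whether the capture script performed this conversion itself, so the following Python sketch is illustrative only. It maps an equirectangular pixel to a view direction, picks the cubemap face that direction would sample, and computes where each eye sits in an over-under frame. The axis convention, the left-eye-on-top layout (the common convention for top-bottom 360° stereo), the 4096×4096 frame size, and all function names are assumptions.

```python
"""Illustrative sketch: equirectangular lookup into a cubemap, plus over-under layout."""
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def equirect_direction(px: int, py: int, width: int, height: int) -> Vec3:
    """Unit view direction through the centre of an equirectangular pixel.

    Longitude runs -pi..pi left to right; latitude runs +pi/2..-pi/2 top to bottom.
    Assumed axes: +x right, +y up, +z forward (a Unity-like convention).
    """
    lon = ((px + 0.5) / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - (py + 0.5) / height) * math.pi
    return (math.cos(lat) * math.sin(lon),
            math.sin(lat),
            math.cos(lat) * math.cos(lon))


def cube_face(direction: Vec3) -> str:
    """Cubemap face a direction samples: the axis with the largest magnitude."""
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return "+x" if x > 0 else "-x"
    if ay >= az:
        return "+y" if y > 0 else "-y"
    return "+z" if z > 0 else "-z"


def over_under_rect(eye: str, frame_width: int,
                    frame_height: int) -> Tuple[int, int, int, int]:
    """(x, y, w, h) region for one eye in an over-under frame, left eye on top."""
    half = frame_height // 2
    if eye == "left":
        return (0, 0, frame_width, half)
    if eye == "right":
        return (0, half, frame_width, half)
    raise ValueError("eye must be 'left' or 'right'")
```

Under these assumptions, a 4096×4096 over-under frame gives each eye a 4096×2048 equirectangular image. The centre pixel of that image looks straight ahead into the +z face.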

We then sought to render a fully ambisonic soundtrack to pair with our animation. To create our soundtrack, we worked between two separate digital audio workstations: Logic Pro X and Reaper.

We used Logic Pro X to program and compose the ambient synthesizers heard during the video and also to arrange the audio files we gathered from recordings of people talking about their experience with the quarantine. Those audio files were pre-processed in Adobe Audition to cut them down for time and remove noise, and then placed in the final session. The finished product was bounced out as a series of separate tracks for final processing.

Each sound file was then run through encoders inside Reaper to convert it to the “ambiX” ambisonic format. Our four-channel ambiX files are first-order ambisonics: the channels W, Y, Z, and X, in ACN order with SN3D normalization. When decoded to binaural audio for headphones, these four channels provide compelling audio spatialization.
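The documentation does not name the Reaper encoder plugins used, but a first-order ambiX panner applies direction-dependent gains like those in the following Python sketch to place a mono source in the sound field. The function names and the list-of-samples representation are hypothetical. Many real encoder plugins also offer features such as source width, distance cues, and higher orders, which this sketch leaves out.

```python
"""Hypothetical sketch: encoding a mono source into first-order ambiX (ACN/SN3D)."""
import math
from typing import List


def ambix_gains(azimuth_deg: float, elevation_deg: float) -> List[float]:
    """First-order ambiX gains in ACN order [W, Y, Z, X] with SN3D normalization.

    Azimuth is measured counter-clockwise from straight ahead (positive = left);
    elevation is measured upward from the horizon.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = 1.0                          # omnidirectional component (SN3D: gain 1)
    y = math.sin(az) * math.cos(el)  # left/right figure-of-eight
    z = math.sin(el)                 # up/down figure-of-eight
    x = math.cos(az) * math.cos(el)  # front/back figure-of-eight
    return [w, y, z, x]


def encode_mono(samples: List[float], azimuth_deg: float,
                elevation_deg: float) -> List[List[float]]:
    """Pan a mono signal into four ambiX channels, returned as one list per channel."""
    gains = ambix_gains(azimuth_deg, elevation_deg)
    return [[g * s for s in samples] for g in gains]
```

For a source straight ahead, the gains are [1, 0, 0, 1]. Moving the source 90° to the left shifts its directional energy from the X channel to the Y channel. A binaural decoder, such as the one YouTube applies during playback, later turns these four channels into a two-channel headphone signal.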

With both our animation and our soundtrack rendered, we used Adobe Premiere to piece the two together. Premiere’s VR presets provided an efficient method of properly encoding our material into a file which could then be recognized by YouTube.

Challenges and abandoned iterations: did you achieve your goals? Why or why not?

Our project saw a number of challenges, iterations, and abandoned goals throughout the semester, as we struggled towards a clearer vision of what we wanted to do, and then were faced with adapting this vision to the changing circumstances around us.

Although we initially shared a common interest in developing a virtual reality musical art piece, we struggled first to specify what this would entail, and then to agree on a concise, well-developed theme. In keeping with our original goal of developing a “VR sound museum,” our first iteration involved an Oculus Quest setup. Users would be escorted down a virtual museum hallway on a floating platform, with various sounds pinned to objects in the scene. At this stage, the platform was central to the design: it would drive the experience and allow us to overcome the locomotion constraints that come with VR design. We had even considered constructing a physical platform to match the virtual one to reinforce the effect. Later, the virtual platform was swapped for a wicker basket that would carry the participant along a river. This prototype was the last to involve any Oculus integration, or a platform for that matter.

Subsequently, given our equipment limitations, we sought other means of conveying the experience we had hoped to deliver and turned to more accessible forms of immersive content, such as 360-degree videos. Our challenge then became creating this content while maintaining our original conceptual framework.

Most of the challenges we faced in the final iteration of the project were technical rather than creative. The biggest problem was how to connect everything we were creating so that the building process went as smoothly as possible. In the end, we found that most elements had to be created separately and then brought into a main program, primarily Unity, for assembly.

For the 3D models, creating them was not really the problem. The trouble came when exporting them from Maya or Blender into Unity: textures sometimes failed to transfer or apply, and models arrived with missing components. Other challenges involved deciding how the piece would look in its final state and how the audio components would blend with and complement the environment.

We feel that we did achieve our goals with the project. From the feedback we received at the showcase, it sounded like people were able to understand our intent for the project as well as its meaning.

Possible future iterations (if you were to keep working on it from here, what would you do?)

If I were to keep working on it, I would probably:

- increase the fidelity of the 3D models;
- add more 3D models to the video;
- return to developing for VR;
- introduce more sound elements into the scene;
- lengthen the video (if it stays in video form);
- generally refine it further, since things can always be improved.

What kind of feedback did you receive from others on your project?

After putting in so much work and doing our best to bring something we were happy to share to the Digital Culture Spring 2020 Showcase, we were pleased to receive a lot of positive and helpful feedback from faculty and peers alike.

One of the aspects of the project that we were most excited to share was the ambisonic audio track accompanying the 360-degree video. In creating this element of the project, we spent a good amount of time consulting with and learning from Professor Garth Paine, our faculty mentor. After experiencing our project through a headset fitted with his mobile device, Garth complimented the audio spatialization of the video. He told us that he enjoyed hearing what we had put together and was impressed that we had been able to execute what he had shared with us. He also offered some tips on how we might make the experience even more effective by tweaking some of the technical parameters within the game engine we used. We greatly appreciated the input of our professor, who served as expert counsel for this project.

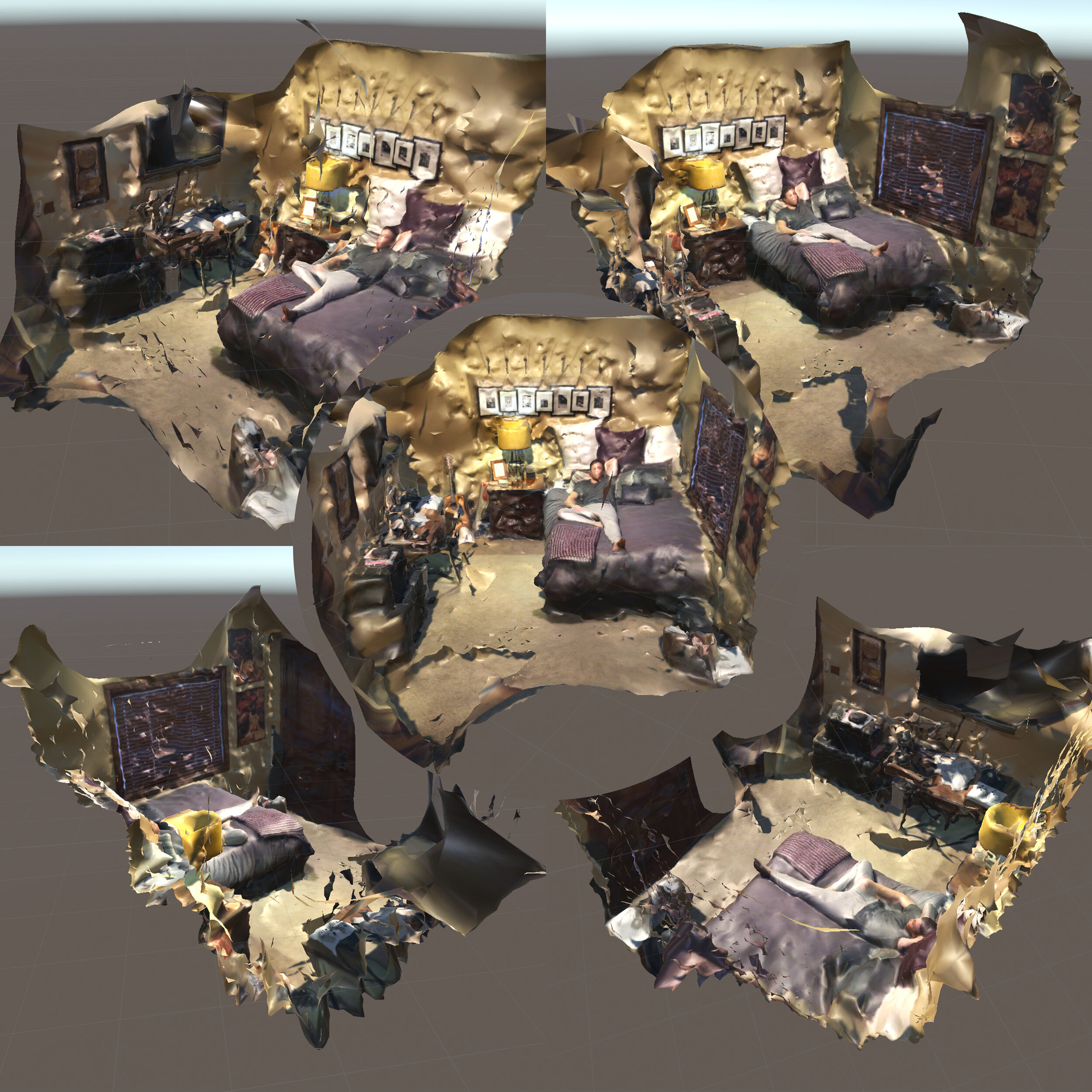

Other feedback concerned how the visuals combined with the audio conveyed the theme of the project: the COVID-19 pandemic and living in this modern condition of social distancing. A number of visitors to our showcase Zoom meeting mentioned that two portions felt very reflective of the current situation: the sections where you soar over Tempe and the section of stitched-together bedrooms. The 3D renderings of the bedrooms were received particularly well. There was a lot of discussion about how they represented our current state of being connected only by digital means. Just as we were all speaking to one another from our own dwellings during the Zoom showcase, many recognized the parallel between that reality and the virtually connected bedrooms.

Overall, we were incredibly happy with the reception of our project. We felt like it was entirely positive and useful feedback. It was an incredibly enjoyable experience getting to share our hard work with so many of our instructors and peers despite the unexpected and norm-altering circumstances.

Annotated Bibliography:

ASU Department of Psychology. (2018). Let's talk about "Awe". YouTube. Retrieved 5 May 2020, from https://www.youtube.com/watch?v=1o2XkcftsMQ.

Michelle Shiota, associate professor in ASU’s Department of Psychology, describes the emotion of awe and its psychological implications. For centuries, artists and designers have addressed the emotion of awe through a variety of means. Churches, cathedrals, and other places of worship often evoke religious feelings of awe through the grandiosity and holiness of their design. Awe can generally be described as “a feeling of reverential respect mixed with fear or wonder.” I think we are capable of creating spaces and experiences that can invoke this sense of awe, or at the very least, sweep people off their feet in some way. Some spaces that come to mind are those that feel infinite, like being inside a Pink Floyd album cover, or even uncanny, like observing animated creatures walking around you.

Blast Theory. (2020). A Cluster of 17 Cases. Blast Theory. Retrieved 5 May 2020, from https://www.blasttheory.co.uk/blast-theory-17-cases/.

A piece involving a scale model of the 9th floor of the Metropole Hotel in Hong Kong which was the epicenter of the 2003 outbreak of SARS. The piece also has an audio component: a fictional first person account based on one of these “17 cases” and an interview with the coordinator for the WHO’s official response in 2003.

Blast Theory. (2020). Spit Spreads Death. Blast Theory. Retrieved 5 May 2020, from https://www.blasttheory.co.uk/projects/spit-spreads-death/.

An exhibit and parade marking the 100th anniversary of the influenza pandemic that killed thousands. It focused in particular on the Liberty Loan Parade held in Philadelphia in 1918, which drew 200,000 people to an open-air parade down Broad Street. Within 72 hours of the gathering, the disease had spread so rapidly that every hospital in Philadelphia was full. The march featured cards bearing the names of individuals who died from the flu. It also included a musical piece played through marchers’ phones, whose lights were used for effect.

Fleisher. (n.d.). Livyatanim Sono Binaural Experience. Retrieved from https://sono.livyatanim.com/

This music video brings the viewer/listener into a web-based virtual space with 360 degrees of video and sound. The dark, cave-like space is enclosed by a dome-shaped ceiling on which stars, asteroids, and celestial bodies can be seen floating around. In this way, the viewer is guided through the music composition by mixed reality visuals, with the added ability to change their perspective. Our project could benefit from considering how we might also guide our listeners through the composition we end up creating. In this project, the narrative seems to center on themes of space and marvel. Given our set of tools, which include (among other things) 360-degree cameras and ambisonic microphones, a similar approach to VR music would likely be achievable.

Goni, Kyriaki. “Privacy Sessions.” Privacy Sessions, Kyriaki Goni, Athens, Greece, https://kyriakigoni.com/portfolio/privacy_sessions.html.

This piece by Kyriaki Goni is one that combines the ideas of data, privacy, and nostalgia into one succinct package (though not in VR). The artist used their Google Maps data combined with personal memories to list out the methods of transportation for that day, which when combined with the photo/map of the location and a descriptive title, work together to create a simple and yet endlessly complex narrative for each day.

G-Dub. (2017). Paving The Road To Awe (VR Reactions). YouTube. Retrieved 5 May 2020, from https://www.youtube.com/watch?v=KvWqhkq-9bg.

This montage includes a collection of reactions to awe-inducing virtual reality experiences. Most come from elderly and disabled people, but there are others as well, such as people far away from their loved ones. Virtual reality has proven itself to be an incredibly potent medium, one that can elicit extremely intense reactions in many individuals. By its nature it is meant to immerse you in a different place and convince your senses that the space really exists. This can be handled in a variety of ways. However, I believe that in designing our virtual spaces, we should seek to provoke a meaningful and self-reflective reaction among participants. That reaction might impress them or leave a lasting memory in some fundamental way, through the space's size, its realism, or its surrealism.

IoT Art: Networked Art - Listening Post. (2019, November 1). Retrieved from https://www.postscapes.com/networked-art/

Listening Post by Mark Hansen and Ben Rubin has to do with themes of online presence and virtual communication. Some of the inspiration that we draw from this project includes ideas about how we represent ourselves and how we are represented in virtual ways. To us it seems to speak to vulnerability when it comes to the representation of a person’s personal data.

“Created by Mark Hansen and Ben Rubin Listening Post is an installation that pulls text fragments in real time from thousands of chat rooms, bulletin boards and other public forums online. The text is then displayed across a suspended grid of screens and sung or spoken by a voice synthesizer. The art is ‘a visual and sonic response to the content, magnitude, and immediacy of virtual communication.’”

La Camera Insabbiata: 沙中房間. (n.d.). Retrieved from http://lacamerainsabbiata.org/en/

“La Camera Insabbiata is a virtual reality work by Hsin-Chien Huang and myself in which the reader flies through an enormous structure made of words, drawings, and stories. Once you enter you are free to roam and fly. Words sail through the air as emails. They fall into dust. They form and reform.”

Microsoft. (2019). Muse brings their latest album to life with VR. YouTube. Retrieved 5 May 2020, from https://www.youtube.com/watch?v=uQv3hMh55F4.

A short video where the band Muse explains the ideas behind the VR games that they helped create and soundtrack as part of the tour experience for their album “Simulation Theory”. Another example of a conventional artist jumping into the VR space, though in this case these games are actually games as opposed to the “experience” model we ended up going for.

Museum of Arts and Design. (2019, September 14). Sonic Arcade: Shaping Space with Sound. Retrieved February 10, 2020, from https://madmuseum.org/sonic-arcade-shaping-space-with-sound

This exhibit at the Museum of Arts and Design in New York focuses on the physical aspect of sound. It lets people play with sound as more of a physical medium that affects the environment of the space it's in. The exhibit shows off the physical and spatial aspects of sound that I think we're going for with the idea of a VR 'sound museum', where the sound being generated informs the listener about the environment.

Nothing To Be Written. (n.d.). Retrieved February 11, 2020, from https://canvas-story.bbcrewind.co.uk/nothing-to-be-written/

“Nothing to Be Written” is a VR artwork inspired by the field cards that soldiers sent home in WW1. On these cards, soldiers were only allowed to write their signature and the phrases that the military permitted. The piece explores the human connections and stories behind these postcards, and the time and circumstances in which they were written and sent.

Sinclair, F. (2016, August 20). The VR Museum of Fine Art on Steam. Retrieved February 10, 2020, from https://store.steampowered.com/app/515020/The_VR_Museum_of_Fine_Art/

This is a more literal example of how the structure of our project might play out; in this case the developer is using VR to give people a chance to interact with already existing famous works of art, but we would be using a similar idea to convey what a person's data might look and sound like if it were placed in a similar setting.

Sounding Circuits: Audible Histories. (n.d.). Retrieved from http://sethcluett.com/works/sounding-circuits-audible-histories/

Seth Cluett produced an 8-channel cube of loudspeakers to create a 360-degree space of sound through ambisonics. The gallery exhibition in which it was hosted, Sounding Circuits, looked at early efforts to create digital sound – and the often unexpected means that composers used to make their music audible. The sound cube was meant to immerse the listener in reproductions of classical music performances as they would have been heard when they were written. Speaker arrays have been discussed as effectively immersive but technically challenging. The concept of hosting a sphere of sound at a particular location in VR, however, is attractive at this point.

Sumra, H. (2017, May 9). How we can use VR to relive memories, and how it changes the past. Retrieved from https://www.wareable.com/vr/vr-re-living-memories-9393

This article by Sumra questions the preservation of sentimental memories using VR. Thomas Wolosik, who runs a wedding photography service, began using 360-degree video for his clients. The technology has limitations that curb one’s ability to fully re-experience a given memory. Despite this, his clients found the footage particularly helpful to their own recollection of events, as they could revisit the experience over and over, taking away something new each time. For our project, we might consider what happens when something from the past is re-lived in VR. This is a complicated matter, as every person has memories and experiences unique to their own lives. Perhaps, however, there are common memories or experiences that can be replicated through immersive sounds and visuals. It would be interesting to see which kinds of stimuli are more effective at evoking nostalgia, or a new perception of the past.

The Glass Room. “Deletion Process_Only You Can See My History.” The Glass Room, Tactical Tech, 16 Oct. 2019, https://theglassroom.org/object/goni-deletion_process_only_you_can_see_my_history

This is another piece by Goni that emphasizes the act of putting private data in a public space; giving people a chance to understand firsthand the importance of their ability to control (and delete) their data. Making something as continuously interactive as this installation would be unlikely to work in VR, but the concept can serve as a jumping off point for what we want to accomplish.

Vulnicura VR. Windows VR Version, 2019.

A VR album experience created by Björk; this was one of the first sources we found when we were looking for examples of a VR album, which was the original concept our group formed around. Though it had technical issues, this was one of the first big leaps into the VR/ambisonic space by a more conventional musician.